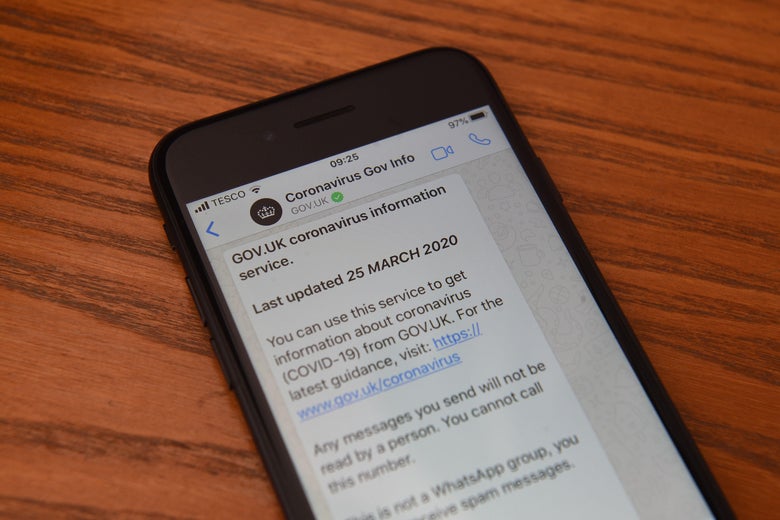

A communication from the British government’s Coronavirus Information Service on WhatsApp displayed on a smartphone on March 25

Oli Scarff/Getty Images

On Tuesday, WhatsApp announced that it will impose strict limits on forwarding messages. It’s part of the messaging service’s push to slow the spread of false information about the coronavirus. (WhatsApp has been called a “petri dish” of coronavirus misinformation.) Users can now send frequently forwarded messages, ones that have been sent through a chain of more than five people, to only one chat at a time. WhatsApp won’t restrict how many people a user can send these forwarded messages to one after another, but the new measure makes mass forwarding slow and inconvenient. The goal is for monotony to become a barrier to viral content.

“[W]e’ve seen a significant increase in the amount of forwarding which users have told us can feel overwhelming and can contribute to the spread of misinformation,” WhatsApp said in a blog post. “We believe it’s important to slow the spread of these messages down to keep WhatsApp a place for personal conversation.”

This is the latest step in WhatsApp’s gradual campaign to reduce the dissemination of fake information. In 2018, the company began labeling forwarded messages and limiting the number of chats to which a user could forward a message. The company has since started marking frequently forwarded messages with a double arrow icon and cut the number of chats a user can forward a message to down to five. These measures slowed the rate of forwarding in the app globally by 25 percent, the company said.

Although WhatsApp hasn’t directly framed its new policy as a way to combat coronavirus misinformation, it comes at a time when the service, among other social media platforms, is facing scrutiny for its role in spreading false claims about COVID-19. This year, the U.S. has seen the focus of misinformation campaigns shift from content about the presidential election to content about the pandemic. (Remember that edited clip of Joe Biden that both Twitter and Facebook labeled as manipulated media?) In the past few weeks alone, WhatsApp has facilitated the spread of the viral 5G conspiracy theory, which argues that new 5G mobile networks have caused the pandemic. It’s also a hotspot for fake cures, such as drinking warm water every 15 minutes.

Limiting the means of spreading information—rather than the content itself—is one of the few ways for a messaging service with end-to-end encryption to try to extinguish the wildfire of fake news on its platform. Unlike its parent company, Facebook, WhatsApp can’t flag or remove misleading information, since moderators aren’t privy to the secure content users share with one another.

But this isn’t the only way WhatsApp has attempted to clamp down on hoaxes and rumors amid the pandemic. The company has created a Coronavirus Information Hub and donated $1 million to the International Fact-Checking Network to support reporting on COVID-19 rumors. It has also partnered with the World Health Organization to launch a bot called the WHO Health Alert. The bot, which provides official COVID-19 information and advice and aims to debunk coronavirus myths, has already been used by more than 10 million people.

Future Tense is a partnership of Slate, New America, and Arizona State University that examines emerging technologies, public policy, and society.

from Slate Magazine https://ift.tt/34tfYtu
